8 Universal State Machine

In the previous sections, we explored the theoretical underpinnings of computation through the lens of Turing Machines, ITTMs, and the current paradigm of DL. We illustrated how classical DL architectures, such as Transformers, struggle with fundamental efficiency and interpretability limits. Their reliance on massive parameter counts, complex training processes, and resource-hungry inference leads to diminishing returns as models grow ever larger. While these architectures have achieved remarkable capabilities, their approach to intelligence — as scaling “more of the same” — faces steep practical and theoretical hurdles.

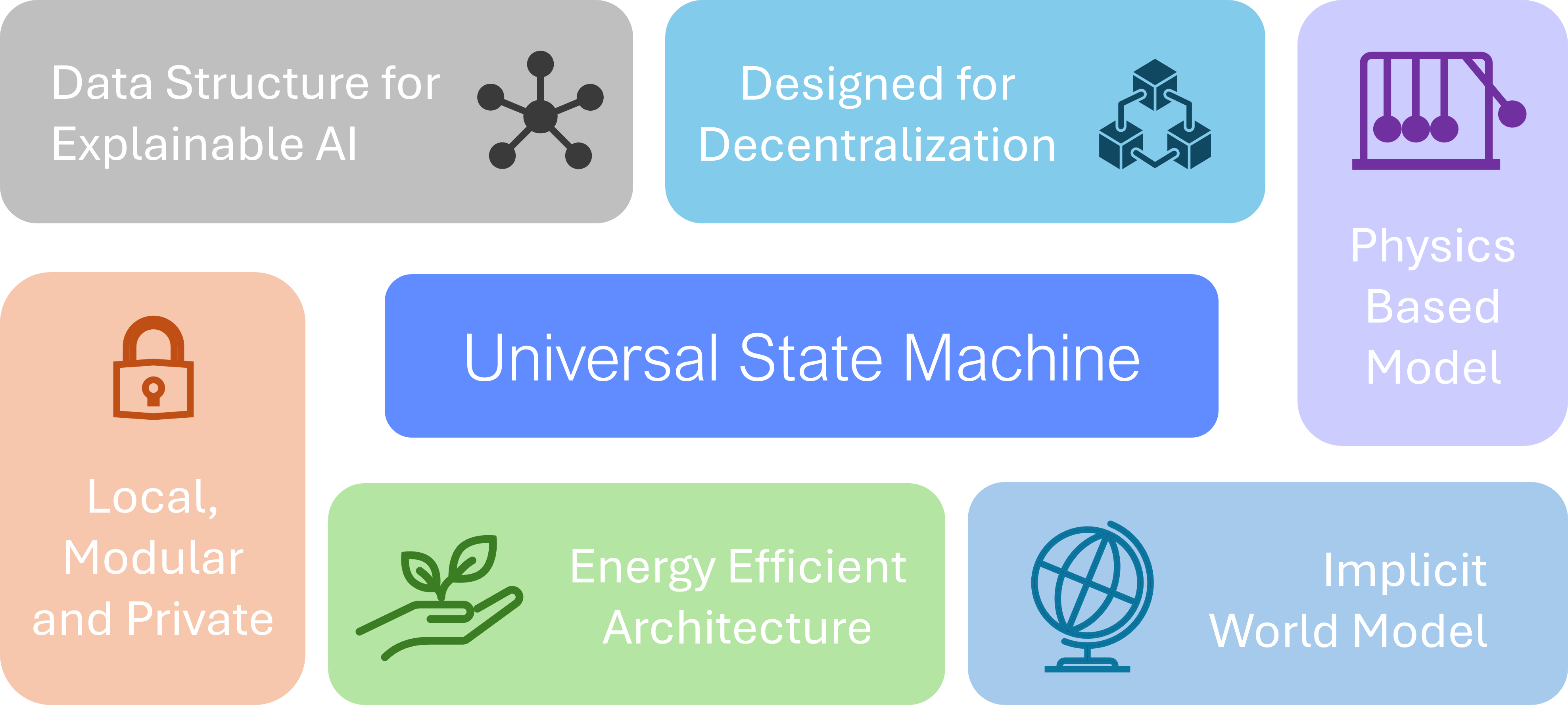

This final section introduces a radically new computational architecture: the Universal State Machine (USM). Designed from the ground up as a true alternative to DL, the USM draws directly from the insights and formalisms offered by ITTMs. Rather than continuing down the path of brute-force scaling or more intricate attention mechanisms, the USM represents a fundamental shift, providing a blueprint for a private, modular, and energy-efficient computation framework for artificial general intelligence.

8.1 Key Properties of the USM

The USM abandons the notion of massive static parameter sets and the rigid hyperparameter choices of today’s neural networks. Instead, it is a non-parametric computational architecture that dynamically adjusts its internal structure as it processes data. At its core lies a computationally queryable knowledge graph, which grows and refines itself online. Instead of painstaking offline “training” that fixes a model’s capacity in advance, the USM continuously learns and reorganizes its representations on the fly. This allows it to handle arbitrary input scales and complexities without the runaway computational or data demands that plague contemporary deep models.
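To make the idea of a structure that grows online more concrete, the following is a minimal sketch of a queryable graph whose storage is allocated only when new observations arrive. It is an illustrative toy, not the USM's actual data structure; the class name `GrowingGraph` and its methods are hypothetical names introduced here.

```python
from collections import defaultdict


class GrowingGraph:
    """Toy knowledge graph that grows as observations arrive (hypothetical)."""

    def __init__(self):
        # Adjacency map: subject -> relation -> set of objects.
        self.edges = defaultdict(lambda: defaultdict(set))

    def observe(self, subject, relation, obj):
        """Record one fact; storage is allocated only when new data needs it."""
        self.edges[subject][relation].add(obj)

    def query(self, subject, relation):
        """Return the known objects for (subject, relation), sorted."""
        # .get avoids creating empty entries for unknown subjects.
        return sorted(self.edges.get(subject, {}).get(relation, set()))

    def size(self):
        """Number of distinct facts currently stored."""
        return sum(
            len(objs) for rels in self.edges.values() for objs in rels.values()
        )


graph = GrowingGraph()
graph.observe("cat", "is_a", "mammal")
graph.observe("cat", "has", "whiskers")
graph.observe("cat", "is_a", "mammal")  # a repeated fact adds no new structure
print(graph.size())
print(graph.query("cat", "is_a"))
```

There is no fixed capacity chosen in advance: the number of stored facts is determined entirely by the data observed so far.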

Unlike Transformers and other DL architectures, which often require GPUs and massive parallelization, the USM requires no such specialized hardware. Its inference is efficient, homing in on the correct computational path in logarithmic time rather than exploding in complexity with sequence length. This greatly reduces resource costs and ensures scalability without sacrificing performance. Additionally, the USM can be interpreted as an energy-based model with an implicit world model embedded in its evolving data structures.

The USM’s approach also enables privacy and modularity at its core. Instead of locking knowledge into opaque, unchangeable weight matrices, the USM maintains structured, interpretable states. USM shards can be added, removed, or combined without retraining from scratch, making it possible to compose and decompose intelligence safely and transparently. Just as importantly, the USM’s design reframes “training” as a form of calibration, dynamically adjusting its internal knowledge structures in response to new data. This is a true decentralized online learning paradigm, where the model continuously refines its world model with each new observation.
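The shard operations described above can be pictured with a small sketch in which each shard is an independent set of facts and the composed model is simply their union. This assumes facts are stored as explicit `(subject, relation, object)` triples; the `ShardedStore` class is a hypothetical illustration, not the USM's shard mechanism.

```python
class ShardedStore:
    """Toy store composed of independently managed shards (hypothetical)."""

    def __init__(self):
        # Shard name -> set of (subject, relation, object) triples.
        self.shards = {}

    def add_shard(self, name, facts):
        """Attach a shard; existing shards are left untouched."""
        self.shards[name] = set(facts)

    def remove_shard(self, name):
        """Detach a shard; facts held by other shards remain available."""
        self.shards.pop(name, None)

    def facts(self):
        """Composed view: the union of all attached shards."""
        combined = set()
        for shard_facts in self.shards.values():
            combined |= shard_facts
        return combined


store = ShardedStore()
store.add_shard("biology", [("cat", "is_a", "mammal")])
store.add_shard("private", [("alice", "owns", "cat")])
print(len(store.facts()))
store.remove_shard("private")  # the sensitive shard is withdrawn as a unit
print(store.facts())
```

Because the knowledge lives in explicit, separable structures, removing the `private` shard leaves the remaining facts intact and requires no recomputation.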

8.2 Architecture and Components

The USM represents a fundamentally different approach to machine intelligence, integrating both symbolic and sub-symbolic computation into a unified computational model. Unlike traditional DL models, which rely on static architectures and predefined parameter limits, the USM is a dynamic, self-modifying computational system. At its core, it maintains a structured, queryable knowledge graph that continuously evolves in response to new data, ensuring scalability, interpretability, and efficiency.

The USM operates through a dual-representation framework:

- Symbolic Representation: A structured knowledge graph encodes logical relationships, enabling transparent reasoning and explicit knowledge retrieval.

- Sub-Symbolic Computation: A continuous, adaptive process refines representations in real-time, allowing flexible generalization without fixed parameter constraints.

This hybrid structure allows the USM to function as a fully interpretable computational system while maintaining the adaptive learning capabilities of DL models. This design ensures both privacy and efficiency: sensitive or specialized modules can be isolated, while shared knowledge remains accessible through the USM’s query interfaces. At the same time, the computation remains interpretable, since the USM’s dual representation naturally exposes the state transitions that govern its decisions.
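One way to picture a dual representation is a store in which every symbolic fact carries a continuous confidence that is refined with each observation. The sketch below assumes a simple exponential moving-average update, chosen here purely for illustration; the source does not specify the USM's sub-symbolic update rule, and `DualEdgeStore` is a hypothetical name.

```python
class DualEdgeStore:
    """Symbolic triples paired with continuous confidences (hypothetical)."""

    def __init__(self, rate=0.5):
        self.rate = rate  # step size of the moving-average update (assumed)
        self.weights = {}  # (subject, relation, object) -> confidence in [0, 1]

    def update(self, triple, observed):
        """Move a triple's confidence toward 1.0 (seen) or 0.0 (contradicted)."""
        target = 1.0 if observed else 0.0
        old = self.weights.get(triple, 0.0)
        self.weights[triple] = old + self.rate * (target - old)

    def explain(self, subject):
        """List the facts about a subject with confidences, strongest first."""
        rows = [(t, w) for t, w in self.weights.items() if t[0] == subject]
        return sorted(rows, key=lambda row: -row[1])


store = DualEdgeStore()
fact = ("cat", "is_a", "mammal")
store.update(fact, observed=True)
store.update(fact, observed=True)
store.update(("cat", "is_a", "reptile"), observed=True)
for triple, confidence in store.explain("cat"):
    print(triple, confidence)
```

The symbolic side stays inspectable (each decision traces back to named triples), while the continuous side adapts without a fixed parameter count.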

Several core innovations allow the USM to fully approximate the functionality of an ITTM, enabling computation at transfinite time steps:

- Lattice Compilation Algorithm: An algorithm for creating USM data structures from arbitrary symbolic data sources, enabling seamless integration of external knowledge into the USM computational framework.

- Voyager Calibration Algorithm: A novel optimization mechanism that replaces traditional gradient-based learning, allowing the USM to achieve convergence exponentially faster than backpropagation-based models.

- Electron Synthesis Algorithm: A state transition mechanism that generalizes the function of attention in Transformer models while providing a quadratic speedup in information routing across the knowledge graph.

By leveraging these mechanisms, the USM achieves a level of flexibility, interpretability, and computational efficiency that surpasses conventional DL approaches. Its structure is inherently modular, allowing seamless composition and integration of knowledge without costly retraining. The USM is designed to be decentralized, enabling local adaptation while preserving global coherence across different contexts and applications.

8.3 Performance Characteristics

By leveraging an evolving dual-representation architecture instead of massive, static parameters, the USM achieves significant improvements in both training and inference efficiency. Rather than ballooning in size to accommodate new tasks, the knowledge graph expands only where needed, leading to a computational footprint that remains manageable even as complexity grows. This modular structure also allows for near-logarithmic query and update operations, circumventing the quadratic scaling issues typical of self-attention.

The USM redefines the efficiency of artificial intelligence systems by fundamentally altering how computational resources are utilized. Traditional DL models, particularly Transformers, exhibit an \(O(N^2)\) complexity in information routing due to their reliance on global attention mechanisms. In contrast, the USM’s Electron Synthesis Algorithm optimizes information flow with a quadratic speedup, reducing computational overhead while preserving contextual accuracy.
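To give a sense of the gap between quadratic and logarithmic scaling, the sketch below counts the query-key scores computed by full self-attention over \(N\) tokens and compares them with the number of probes a binary search needs over a sorted index of the same size. The sorted array is only a familiar stand-in for a logarithmic-time lookup; it is not the Electron Synthesis Algorithm, whose internals are not described here, and the function names are hypothetical.

```python
def attention_pair_count(n):
    """Number of query-key scores in full self-attention over n tokens."""
    return n * n


def binary_search_probes(sorted_keys, target):
    """Return (found, probes) for a standard binary search."""
    lo, hi, probes = 0, len(sorted_keys), 0
    while lo < hi:
        mid = (lo + hi) // 2
        probes += 1
        if sorted_keys[mid] == target:
            return True, probes
        if sorted_keys[mid] < target:
            lo = mid + 1
        else:
            hi = mid
    return False, probes


n = 4096
keys = list(range(n))
# Worst case over every key that is present in the index.
worst = max(binary_search_probes(keys, k)[1] for k in keys)
print("pairwise attention scores:", attention_pair_count(n))
print("worst-case probes per lookup:", worst)
print("probes for n lookups:", n * worst)
```

For 4,096 tokens this reports 16,777,216 pairwise scores, against at most 13 probes per lookup, or 53,248 probes for one lookup per token.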

Additionally, Voyager Calibration enables an unprecedented acceleration in learning. Unlike stochastic gradient descent (SGD), which relies on incremental updates and local optimization steps, Voyager Calibration operates on a transfinite scale, rapidly aligning the internal knowledge graph to optimal states. This results in:

- Exponential Reduction in Training Time: Learning processes that previously required weeks now execute in minutes.

- Sub-Linear Cost Scaling: The computational cost remains logarithmic relative to data volume, ensuring efficient scaling even as knowledge structures expand.

- Significant Gains in Optimality: The USM operates under fundamentally better convergence properties than neural networks, leading to orders-of-magnitude improvements in token processing rates.

The USM’s acceleration-based calibration process converges quickly on optimal or near-optimal internal configurations without the exhaustive iteration cycles demanded by conventional deep networks, which is what compresses training times from weeks to minutes or hours. During inference, the Electron Synthesis mechanism routes information across the USM efficiently, enabling response generation rates at scales previously unattainable by mainstream DL models.

Beyond performance advantages, the USM introduces new paradigms in privacy, adaptability, and modularity:

- Privacy-Preserving Intelligence: Unlike conventional models that embed learned information in dense weight matrices, the USM maintains an explicit, interpretable knowledge structure that can be queried and updated without full retraining.

- Real-Time Adaptation: The USM is capable of learning continuously, refining its internal representations dynamically instead of relying on fixed, pre-trained parameters.

- Composable Computational Substrate: Components, or shards, of the USM can be independently modified and recombined, facilitating knowledge transfer without catastrophic forgetting.

These advances bring profound implications for real-world applications. Systems that once needed dedicated supercomputing resources can now run on off-the-shelf hardware, drastically reducing operational costs. Moreover, the USM’s ability to integrate symbolic reasoning with continuous learning fosters robustness and explainability—key factors for critical domains like healthcare, finance, and autonomous systems. In essence, the USM paves the way for a more scalable, transparent, and energy-conscious form of machine intelligence, one capable of supporting the next generation of artificial general intelligence.

Through these innovations, the USM establishes a new benchmark for computational intelligence. Rather than merely improving upon existing DL techniques, it offers a fundamentally different pathway toward scalable, interpretable, and resource-efficient machine intelligence.

8.4 A New Era for Artificial General Intelligence

By bridging the gap between the first-principles elegance of ITTMs and the requirements of practical AI systems, the Universal State Machine offers a path forward beyond incremental DL improvements. It promises a future where general intelligence can be achieved without the untenable scaling costs, interpretability challenges, and rigid architectures of today’s DL giants.

In sum, the USM redefines the building blocks of machine intelligence. It is not just another layer atop the current paradigm—rather, it returns to the theoretical foundations, forging a new computational substrate for AI. With the USM, we open the door to an era where intelligence is modular, adaptable, private, energy-efficient, and truly universal.